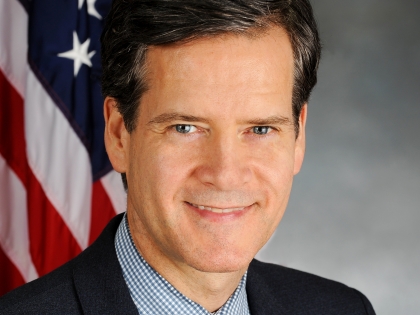

As the Anniversary of the January 6 Insurrection Approaches, Senator Brad Hoylman Introduces Bill to Hold Tech Companies Accountable for Promoting Vaccine Misinformation & Hate Speech on Social Media

December 27, 2021

NEW YORK— In the week before the anniversary of the notorious January 6 insurrection at the U.S. Capitol, and as vaccine hesitancy continues to fuel the Omicron variant, Senator Brad Hoylman (D/WFP-Manhattan) announced new legislation (S.7568) to hold social media platforms accountable for knowingly promoting disinformation, violent hate speech, and other unlawful content that could harm others. While Section 230 of the Communications Decency Act protects social media platforms from being treated as publishers or speakers of content shared by users on their apps and websites, this legislation instead focuses on the active choices these companies make when implementing algorithms designed to promote the most controversial and harmful content, which creates a general threat to public health and safety.

Senator Hoylman said: “Social media algorithms are specially programmed to spread disinformation and hate speech at the expense of the public good. The prioritization of this type of content has real life costs to public health and safety. So when social media push anti-vaccine falsehoods and help domestic terrorists plan a riot at the U.S. Capitol, they must be held accountable. Our new legislation will force social media companies to be held accountable for the dangers they promote.”

For years, social media companies have claimed protection from any legal consequences of their actions relating to content on their websites by hiding behind Section 230 of the Communications Decency Act. Social media websites are no longer simply a host for their users’ content, however. Many social media companies employ complex algorithms designed to put the most controversial and provocative content in front of users as much as possible. These algorithms drive engagement with their platform, keep users hooked, and increase profits. Social media companies employing these algorithms are not an impassive forum for the exchange of ideas; they are active participants in the conversation.

In October 2021, Frances Haugen, a former Facebook employee, provided shocking testimony to United States Senators alleging that the company knew of research proving that its product was harmful to teenagers but purposefully hid that research from the public. She also testified that the company was willing to use hateful content to retain users on the social media website.

Social media amplification has been linked to many societal ills, including vaccine disinformation; encouragement of self-harm, bullying, and body-image issues among youth; and extremist radicalization leading to terrorist attacks like the January 6th insurrection against the U.S. Capitol.

When a website knowingly or recklessly promotes hateful or violent content, it creates a threat to public health and safety. The conscious decision to elevate certain content is a separate, affirmative act from the mere hosting of information and is therefore not contemplated by the protections of Section 230 of the Communications Decency Act.

This bill will provide a tool for the Attorney General, city corporation counsels, and private citizens to hold social media companies and others accountable when they promote content that they know, or reasonably should know:

- Advocates for the use of force, is directed to inciting or producing imminent lawless action, and is likely to produce such action;

- Advocates for self-harm, is directed to inciting or producing imminent self-harm, and is likely to incite or produce such action; or

- Includes a false statement of fact or fraudulent medical theory that is likely to endanger the safety or health of the public.

###
